Store JSON data in a table, OPENJSON and JSON_Value functions | SQL Server 2016 – Part 4

In my previous posts I talked about how to [export] a Table or Query data into JSON string format, and [read it back] from JSON string to Relational-table format, and with [nested elements].

Here, in this post I’ll show how we can store JSON data in a normal table column, just like you store XML data.

XML data is stored in a column of XML datatype which also check the validity of the XML data to be stored. But to store JSON data there is no new datatype introduced, JSON can be stored in an NVARCHAR datatype column just like a plain text, and to validate it you can add a CHECK constraint on it.

IsJSON() function: can be used as a CHECK constraint on the columns that contain JSON string which will validate if the JSON string is in proper format or not.

As we will need AdvantureWorks2014 Sample Database in our example below, we need to upgrade its Compatibility from SQL 2014 to SQL 2016, i.e. from level 120 to 130, like:

USE [master] GO ALTER DATABASE [AdventureWorks2014] SET COMPATIBILITY_LEVEL = 130 GO

You can download AdvantureWorks2014 sample Database from Microsoft [CodePlex site].

–> Ok, now let’s create a new Table with OrderDetailsJSON column for storing JSON string with a CHECK constraint on it:

USE [AdventureWorks2014] GO CREATE TABLE CustomerOrder ( BusinessEntityID INT, FirstName NVARCHAR(50), MiddleName NVARCHAR(50), LastName NVARCHAR(50), EmailPromotion INT, OrderDetailsJSON NVARCHAR(MAX) -- normal column with NVARCHAR datatype CHECK ( IsJSON ( OrderDetailsJSON ) = 1 ) -- CHECK Constraint to validate JSON string )

–> Let’s create a sample record-set with JSON data in OrderDetailsJSON column. We will use FOR JSON AUTO option to convert relational data to JSON string for our example, as shown below:

;WITH CTE_PersonContact AS ( SELECT BusinessEntityID, FirstName, MiddleName, LastName, EmailPromotion, OrderDetailsJSON = ( SELECT SalesOrderID, OrderDate, SubTotal, TaxAmt, TotalDue FROM [AdventureWorks2014].[Sales].[SalesOrderHeader] S WHERE S.CustomerID = P.BusinessEntityID FOR JSON AUTO -- here ) -- our JSON column FROM [Person].[Person] P ) INSERT INTO CustomerOrder SELECT BusinessEntityID, FirstName, MiddleName, LastName, EmailPromotion, OrderDetailsJSON FROM CTE_PersonContact WHERE OrderDetailsJSON IS NOT NULL -- (9778 row(s) affected)

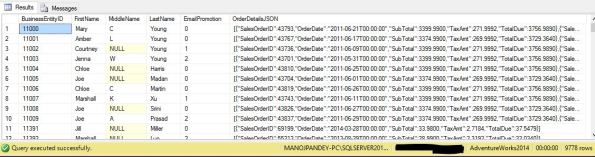

–> Check the above inserted records with the OrderDetailsJSON column containing data in JSON format:

SELECT * FROM CustomerOrder -- (9778 row(s) affected)

–> Let’s Query back the JSON data from the OrderDetailsJSON column with other columns in relational form, by using OPENJSON() function. As for each Customer it can contain multiple orders we will get multiple rows for each Customer and multiple columns as per defined in the JSON string:

SELECT C.BusinessEntityID, C.FirstName, C.MiddleName, C.LastName, C.EmailPromotion, J.SalesOrderID, J.OrderDate, J.SubTotal, J.TaxAmt, J.TotalDue FROM CustomerOrder C CROSS APPLY OPENJSON (OrderDetailsJSON) WITH ( SalesOrderID INT, OrderDate DATETIME, SubTotal MONEY, TaxAmt MONEY, TotalDue MONEY ) AS J -- (17463 row(s) affected)

–> And if you want to get just one Order per Customer then you can use following Query, by using JSON_Value() function and by specifying the array key pointer/position to get the first value fro the array:

SELECT C.BusinessEntityID, C.FirstName, C.MiddleName, C.LastName, C.EmailPromotion, SalesOrderID = JSON_Value (OrderDetailsJSON, '$[0].SalesOrderID'), OrderDate = JSON_Value (OrderDetailsJSON, '$[0].OrderDate'), SubTotal = JSON_Value (OrderDetailsJSON, '$[0].SubTotal'), TaxAmt = JSON_Value (OrderDetailsJSON, '$[0].TaxAmt'), TotalDue = JSON_Value (OrderDetailsJSON, '$[0].TotalDue') FROM CustomerOrder C -- (9778 row(s) affected)

We can also use JSON string for passing multiple values from a single parameter in an Stored Procedure to implement dynamic parameters functionality as a workaround, check the [blog post] for the same.

Hi Manoj,

I have written a procedure which reads all .txt files under a folder path

C:\JSON\sample.txt

But when there is a pattern like ‘C:\JSON\sample.Json

There is a serious problem in defining the delimiters.

What is the ideal solution in picking up dynamic files which comes like *.json and load it into my DB Table.

Thanks,

Hari

Thank you, Manoj, your article has done me a big favor, solved my JSON problem, Hongming from China

I have a much simpler problem, but it is proving to be difficult because my json array is “simple’, i.e., values which are not named.

This is legal JSON and ISJSON() agrees

[ “Tom”, “Dick”, “Harry”]

I want to convert to a table – ideally table variable – with one VARCHAR column such as

Tom

Dick

Harry

How?

Girf, sorry to get back to you so late. You can check this post for your problem: https://sqlwithmanoj.com/2015/10/31/passing-multipledynamic-values-to-stored-procedures-functions-part-5-by-passing-json-string/

Hi Manoj

I have this data. I am not able to produce it in table format. As you can see for a particular id there are multiple markets and multiple TargetTS as well. However when i am trying to cross join it is giving me NULL values. I want to make my code dynamic so that if the markets change for a particular id, the code is able to format it automatically.

{

“unit”:{

“id”:”0000-000-001″},

“markets”:[{“market_name”:”ABC”,”confidence”:{“value”:0.0,”reason”:”Confidence”},”target_summary”:{“num_stores_sold”:70},”targetTS”:{“201923”:{“volume”:114.01351768347274,”stock”:2097.0,”gt”:{“version”:3,”state”:”PROPOSED”,”quantity”:2212}},”201924″:{“volume”:353.98737200392969,”stock”:1743.0},”201925″:{“volume”:403.44161195025242,”stock”:1340.0},”201926″:{“volume”:413.45851448612189,”stock”:926.0},”201927″:{“volume”:366.36272496487959,”stock”:999.0,”gt”:{“version”:3,”state”:”PROPOSED”,”quantity”:439}},”201928″:{“volume”:339.31262927381209,”stock”:659.0},”201929″:{“volume”:107.83728824184725,”stock”:552.0},”201930″:{“volume”:82.9767722724262,”stock”:469.0},”201931″:{“volume”:26.628407405806755,”stock”:442.0},”201932″:{“volume”:23.387216508120012,”stock”:419.0},”201933″:{“volume”:20.540541071878234,”stock”:398.0},”201934″:{“volume”:17.303263133710622,”stock”:381.0},”201935″:{“volume”:14.100802037413823,”stock”:367.0},”201936″:{“volume”:11.496082703451776,”stock”:355.0},”201937″:{“volume”:9.6212527893938,”stock”:345.0},”201938″:{“volume”:8.1897770487294839,”stock”:337.0},”201939″:{“volume”:6.8490356175461615,”stock”:330.0},”201940″:{“volume”:6.0796871411212061,”stock”:324.0},”201941″:{“volume”:5.2301697540754812,”stock”:319.0},”201942″:{“volume”:4.3545727859220404,”stock”:315.0},”201943″:{“volume”:3.5090359703715182,”stock”:311.0},”201944″:{“volume”:3.0514472959371925,”stock”:308.0},”201945″:{“volume”:2.6095033090989688,”stock”:306.0},”201946″:{“volume”:2.1555980109669584,”stock”:303.0},”201947″:{“volume”:1.8209409797683762,”stock”:302.0},”201948″:{“volume”:1.6092960725063015,”stock”:300.0},”201949″:{“volume”:3.5625662730358223,”stock”:296.0},”201950″:{“volume”:1.1108013152385212,”stock”:295.0},”201951″:{“volume”:0.99463744263056564,”stock”:294.0},”201952″:{“volume”:0.827501235094082,”stock”:294.0},”202001″:{“volume”:0.79628709042571666,”stock”:293.0},”202002″:{“volume”:0.68683909060233872,”stock”:292.0},”202003″:{“volume”:0.61143656244191991,”stock”:291.0},”202004″:{“volume”:0.54754665058995255,”stock”:291.0}},”Info”:{“ancestors”:{}}},{“market_name”:”DEF”,”confidence”:{“value”:0.0,”reason”:”Confidence”},”target_summary”:{“num_stores_sold”:100},”targetTS”:{“201923”:{“volume”:223.64706388160459,”stock”:2315.0,”gt”:{“version”:3,”state”:”PROPOSED”,”quantity”:2539}},”201924″:{“volume”:429.45568971024983,”stock”:1885.0},”201925″:{“volume”:472.8916575227048,”stock”:1412.0},”201926″:{“volume”:448.8460632044513,”stock”:964.0},”201927″:{“volume”:142.33414128684748,”stock”:821.0},”201928″:{“volume”:134.45762247532772,”stock”:687.0},”201929″:{“volume”:46.416559248135194,”stock”:640.0},”201930″:{“volume”:47.328721113508408,”stock”:593.0},”201931″:{“volume”:46.416559248135194,”stock”:547.0},”201932″:{“volume”:40.6631946771834,”stock”:506.0},”201933″:{“volume”:34.783269089783332,”stock”:471.0},”201934″:{“volume”:31.863024206587031,”stock”:439.0},”201935″:{“volume”:28.834192071036323,”stock”:410.0},”201936″:{“volume”:24.914283689315916,”stock”:385.0},”201937″:{“volume”:19.711252094580718,”stock”:366.0},”201938″:{“volume”:16.474561612744843,”stock”:349.0},”201939″:{“volume”:13.983894186849252,”stock”:335.0},”201940″:{“volume”:11.583730797353994,”stock”:324.0},”201941″:{“volume”:9.7901099588737228,”stock”:314.0},”201942″:{“volume”:7.6518378063200041,”stock”:306.0},”201943″:{“volume”:5.8723090772696258,”stock”:300.0},”201944″:{“volume”:4.91463508857738,”stock”:296.0},”201945″:{“volume”:4.0970604490544948,”stock”:291.0},”201946″:{“volume”:3.4136240802035558,”stock”:288.0},”201947″:{“volume”:2.9226349796216908,”stock”:285.0},”201948″:{“volume”:7.5009364931664972,”stock”:278.0},”201949″:{“volume”:20.005967634476239,”stock”:258.0},”201950″:{“volume”:1.97349540513996,”stock”:256.0},”201951″:{“volume”:5.1931778040998653,”stock”:250.0},”201952″:{“volume”:1.6656722689711361,”stock”:249.0},”202001″:{“volume”:1.6250156660876478,”stock”:247.0},”202002″:{“volume”:1.4928791062366464,”stock”:246.0},”202003″:{“volume”:1.5368417696264856,”stock”:244.0},”202004″:{“volume”:1.4154851913381397,”stock”:243.0}},”Info”:{“ancestors”:{}}},{“market_name”:”GHI”,”confidence”:{“value”:0.0,”reason”:”Confidence”},”target_summary”:{“num_stores_sold”:140},”targetTS”:{“201923”:{“volume”:163.29768853206477,”stock”:2066.0,”gt”:{“version”:3,”state”:”PROPOSED”,”quantity”:2230}},”201924″:{“volume”:366.13212173187515,”stock”:1700.0},”201925″:{“volume”:388.26587808421186,”stock”:1312.0},”201926″:{“volume”:319.60885647254815,”stock”:992.0},”201927″:{“volume”:267.38487656342357,”stock”:725.0},”201928″:{“volume”:85.684027984971578,”stock”:639.0},”201929″:{“volume”:23.945147766897133,”stock”:615.0},”201930″:{“volume”:19.978866667707802,”stock”:595.0},”201931″:{“volume”:17.525159726972646,”stock”:578.0},”201932″:{“volume”:14.013685289397873,”stock”:564.0},”201933″:{“volume”:11.862858347363904,”stock”:552.0},”201934″:{“volume”:10.011652886972287,”stock”:542.0},”201935″:{“volume”:8.1585450059417983,”stock”:534.0},”201936″:{“volume”:7.1864302476315975,”stock”:526.0},”201937″:{“volume”:5.7872870197567305,”stock”:521.0},”201938″:{“volume”:4.9775887293202388,”stock”:516.0},”201939″:{“volume”:4.27263054898214,”stock”:511.0},”201940″:{“volume”:3.8371481832662688,”stock”:508.0},”201941″:{“volume”:3.2363073780159768,”stock”:504.0},”201942″:{“volume”:2.8190191632483148,”stock”:502.0},”201943″:{“volume”:2.0976668976716688,”stock”:499.0},”201944″:{“volume”:1.6918755921488053,”stock”:498.0},”201945″:{“volume”:1.3938969113437323,”stock”:496.0},”201946″:{“volume”:1.2196155459214133,”stock”:495.0},”201947″:{“volume”:1.0448058426332238,”stock”:494.0},”201948″:{“volume”:0.94909233580243368,”stock”:493.0},”201949″:{“volume”:2.7254522748392853,”stock”:490.0},”201950″:{“volume”:0.91070665645504345,”stock”:490.0},”201951″:{“volume”:0.75427261808958723,”stock”:489.0},”201952″:{“volume”:0.74178278370107587,”stock”:488.0},”202001″:{“volume”:0.68379788164673427,”stock”:487.0},”202002″:{“volume”:0.52324476692926469,”stock”:487.0},”202003″:{“volume”:0.53609503178780549,”stock”:486.0},”202004″:{“volume”:0.49665941177957657,”stock”:486.0}},”Info”:{“ancestors”:{}}}],”meta”:{“login”:”Simply”,”env”:”INTERNAL”}}

Hi Manoj

DECLARE @Responsedetails VARCHAR(MAX)

–insert into [tickit_health].[responsedata] (person_id,survey,date,question_id,questiontext,responsetext)

SELECT @Responsedetails = BulkColumn FROM OPENROWSET(BULK’T:\DataServices\SampleJSON.json’, SINGLE_BLOB) JSON

select

using the query i do get desired results but when i want to insert it in a table it gives an error like

An INSERT statement cannot contain a SELECT statement that assigns values to a variable.

please help me

You will have to apply INSERT statement after OPENROWSET() statement, before the last SELECT statement.