SQL Server 2025 Private Preview – Built-in AI, Fabric connectivity, Native JSON, and many more…

On 19th November 2024, Microsoft announced the Private Preview of the upcoming version of SQL Server, i.e. SQL Server 2025 !!!

This v-Next version emphasizes modern AI and Generative AI (GenAI) capabilities powered by Microsoft Copilot and contemporary Large Language Models (LLMs). These capabilities align with the latest advancements in GenAI, such as OpenAI’s ChatGPT, Meta’s Llama, etc. It also integrates Semantic Kernel, LangChain, and Retrieval-Augmented Generation (RAG) functionalities, positioning it as an enterprise-ready AI database !!!

>> Built-in AI:

– SQL Server 2025 will have Vector Database capabilities

— New native Vector datatype

— Vector search capabilities through new vector functions such as VECTOR_DISTANCE(), which calculates the Euclidean, cosine, or negative dot product distance between two vectors

— Vector index powered by DiskANN (Disk-based Approximate Nearest Neighbor)

— I will be posting more about this topic in my upcoming blogs…

– Call REST APIs, including LLM endpoints, with the sp_invoke_external_rest_endpoint system stored procedure

– Semantic Kernel integration, providing semantic search capabilities for more intuitive data retrieval and analysis

– LangChain integration, enabling seamless interaction between natural language queries and structured database operations
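The three distance metrics named for VECTOR_DISTANCE() above can be illustrated with a small, plain-Python sketch. This only shows the underlying math. It is not SQL Server's implementation, and the function names and sample vectors are hypothetical, chosen purely for illustration.

```python
import math

# Illustrative only: plain-Python versions of the three metrics.
# Function names and sample vectors are assumptions, not SQL Server APIs.

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_distance(a, b):
    """1 minus cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def negative_dot_product(a, b):
    """Negated dot product, so that smaller values mean more similar."""
    return -sum(x * y for x, y in zip(a, b))

v1 = [1.0, 2.0, 3.0]
v2 = [4.0, 5.0, 6.0]

for name, fn in [
    ("euclidean", euclidean_distance),
    ("cosine", cosine_distance),
    ("negative dot product", negative_dot_product),
]:
    print(name, fn(v1, v2))
```

For all three metrics a smaller value means the vectors are closer, which is why the dot product is negated.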

>> Fabric Mirroring:

– Mirroring replicates databases to Fabric with zero ETL

– Data is replicated into OneLake and kept up-to-date in near real-time

– Mirroring protects operational databases from analytical queries

– Compute for replication is included with your Fabric capacity at no cost

– Free mirroring storage for replicas, tiered to Fabric capacity

>> Other Database engine new features:

– Native JSON datatype, index, and functions

– Regular Expression (Regex) support, with new functions usable in CHECK constraints, WHERE clauses, and SELECT lists

– Copilot/GenAI in SSMS (Ver 21) for SQL query/code completions and recommendations

– Enhancements in Performance and Scalability

– Optimized locking, which reduces lock memory consumption and minimizes blocking between concurrent transactions through Transaction ID (TID) Locking and Lock After Qualification (LAQ)

– Real-time event streaming to Azure Event Hubs and Kafka, by capturing and publishing incremental changes to data and schema
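To give a feel for the Regex bullet above, here is a minimal Python sketch of the kind of rule a regex-based CHECK constraint or WHERE filter could express. It is not T-SQL and does not use SQL Server's new functions. The pattern, the helper name, and the sample rows are all hypothetical.

```python
import re

# Hypothetical rule: a deliberately simple email shape check.
# Real-world email validation is more involved; this is only a sketch.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def is_valid_email(value):
    """Return True when the whole string matches the pattern."""
    return EMAIL_PATTERN.fullmatch(value) is not None

# Made-up sample rows standing in for a table.
rows = [
    {"id": 1, "email": "manoj@example.com"},
    {"id": 2, "email": "not-an-email"},
    {"id": 3, "email": "dba.team@example.org"},
]

# Rough equivalent of filtering in a WHERE clause:
# keep only the rows whose email matches the pattern.
valid_rows = [row for row in rows if is_valid_email(row["email"])]

for row in valid_rows:
    print(row["id"], row["email"])
```

The same predicate could play the role of a CHECK constraint by rejecting a row on insert instead of filtering it on read.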

>> SQL Server setup – Feature selection:

Sign up for the SQL Server 2025 Private Preview program here: https://aka.ms/sqleapsignup

>> References:

– https://www.microsoft.com/en-us/sql-server/blog/2024/11/19/announcing-microsoft-sql-server-2025-apply-for-the-preview-for-the-enterprise-ai-ready-database/

– https://www.linkedin.com/pulse/announcing-sql-server-2025-bob-ward-6s0hc/

– https://ignite.microsoft.com/en-US/sessions/BRK195

Azure SQL Database in Fabric Public Preview

The SQL Server and Azure SQL team is revolutionizing AI app development with the Public Preview of SQL Database in Fabric, which was announced at the Microsoft Ignite conference. Try it for free today!

With SQL Database in Fabric, you can:

- Accelerate AI app development with speed, simplicity, and enhanced security.

- Unify operational and analytics workloads on a single, seamless platform.

- Leverage an autonomous, optimized, and secure design tailored for the AI era.

- Land all your data in OneLake in near real time for analytics and AI.

- Get started for free – no charges until January 1, 2025!

Key Highlights:

- Simple: Deploy databases in seconds, integrate seamlessly with OneLake, and leverage AI-assisted tools like Copilot.

- Autonomous: Automatic scaling, optimization, and high availability.

- Optimized for AI: Native vector and RAG support, API-driven model calls, and integration with Azure AI services and Azure AI Foundry.

- Compatibility with popular frameworks such as LangChain and Semantic Kernel.

Learning:

We have multiple resources to help you and your teams ramp up swiftly on Fabric Databases:

- Explore the documentation and build with our end-to-end tutorials

- Skill up with the new Fabric Databases learn modules

- Watch new Data Exposed episodes starting Thursday, November 21st

- Watch the new Microsoft Mechanics episode dropping this week

- Get the latest product news here on the Fabric updates blog

- Join the community for a free live learning series starting on December 3rd

We would like to extend a HUGE THANK YOU to all the customers who participated in the SQL Database in Fabric Private Preview and shared their valuable feedback with us!

“Generative AI”, my take after finishing a course

I just finished a course about “What Is Generative AI?” on LinkedIn. Here are some of my Key Takeaways:

1. Role of Generative AI: Generative AI is described as a tool that is changing how humans create. It’s designed to generate new content or data that is similar to existing patterns. It’s used for tasks such as image synthesis, text generation, and creating realistic content.

2. Importance and Evolution: With Generative AI we no longer need artistic talent to draw or to sing. We can now access concise information in a matter of seconds. We can also automatically generate text such as news articles or product descriptions. The course traces the evolution of generative AI, mentioning breakthroughs and models like GANs, VAEs, ChatGPT, DALL-E, Kubrick, and Midjourney.

3. Generative AI vs Conventional AI: Generative AI, as its name suggests, generates new content. In contrast, Conventional or Discriminative AI focuses on classifying or identifying content based on preexisting data.

4. Applications of Generative AI: It is applied in various domains, including natural language models (e.g., ChatGPT), text-to-image applications (e.g., Midjourney, DALL-E, Stable Diffusion), GANs for creative purposes (e.g., Audi wheel designs, film visual effects), VAEs for anomaly detection (e.g., fraud detection, industrial quality control), and more.

5. Popular Generative AI Tools: Notable generative AI tools are mentioned, such as ChatGPT, DALL-E, Midjourney, and Stable Diffusion. As a creative techie, you can go to GitHub and create a notebook by choosing your favourite Generative AI model from the repo.

6. Future Predictions: The course provides predictions for the next two to three years, foreseeing continued use of generative AI in computer graphics, animation, natural language understanding, energy optimization, transportation, and more. Longer-term predictions include applications in architecture, manufacturing, content creation, and a paradigm shift in the job market.

While Gen AI offers significant benefits and opportunities across various fields, it also has some drawbacks and a potential for misuse. The last two takeaways cover these downsides:

7. Impact on Jobs: The future of jobs is discussed, acknowledging the possibility of a shift in the job market. It’s normal for some jobs to disappear, while other new ones will be introduced.

8. Misuse of Gen AI: Deepfakes, Automated Harassment & Trolling, Misinformation & Fake News, Phishing & Fraud, Plagiarism, etc. It is crucial to develop robust ethical guidelines, regulatory frameworks, and technological solutions that ensure the responsible use of generative AI.

#ArtificialIntelligenceForBusiness #GenerativeAI #ArtificialIntelligence

SQL Planner, a monitoring tool for SQL Server for DBAs & Developers

SQL Planner is a monitoring product for Microsoft SQL Server that helps DBAs and developers identify issues (e.g. high CPU and memory usage, disk latency, expensive queries, waits, storage shortage) and perform root cause analysis with fast, in-depth analytical reports. Historical data is stored in the repository database for as many days as you want.

About SQL Planner monitoring tool, watch the intro here:

SQL Planner has several features under one roof:

– SQL Server Monitoring

– SQL Server Backup Restore Solution

– SQL Server Index Defragmentation Report and Solution

– SQL Server Scripting Solution

– DBA Handover Notes Management

Features and Capabilities:

SQL Planner is built mainly for monitoring and covers the metrics below; their details are available here:

– CPU & Memory Usage reports, Expensive Query and Procedure

– Nice visualization of CPU, Memory, IO usage, and expensive query details

– Performance Counter Reports

– IO Usage Analysis

– Deadlock & Blockers analysis

– Always On Monitoring

– SQL Server Waits analysis

– SQL Server Agent Job Analysis

– Missing Index analysis

– Storage analysis

– SQL Error Log Scan & Report

– Alerts: Notifications are triggered on 50+ criteria, sent via email, and also kept in the SQL Planner dashboard; more details here.

– Handover Notes Management

Cost:

Free forever for students and teachers, and for developers/DBAs in development environments.

Some screenshots of the tool capabilities:

2021 blogging in review (Thank you & Happy New Year 2022 !!!)

Happy New Year 2022… from SQL with Manoj !!!

The WordPress.com Stats helper monkeys stopped preparing annual reports a few years ago for the blogs hosted on their platform. So I started preparing my own Annual Report at the end of every year to thank my readers for their support, feedback & motivation, and also to check & share the progress of this blog.

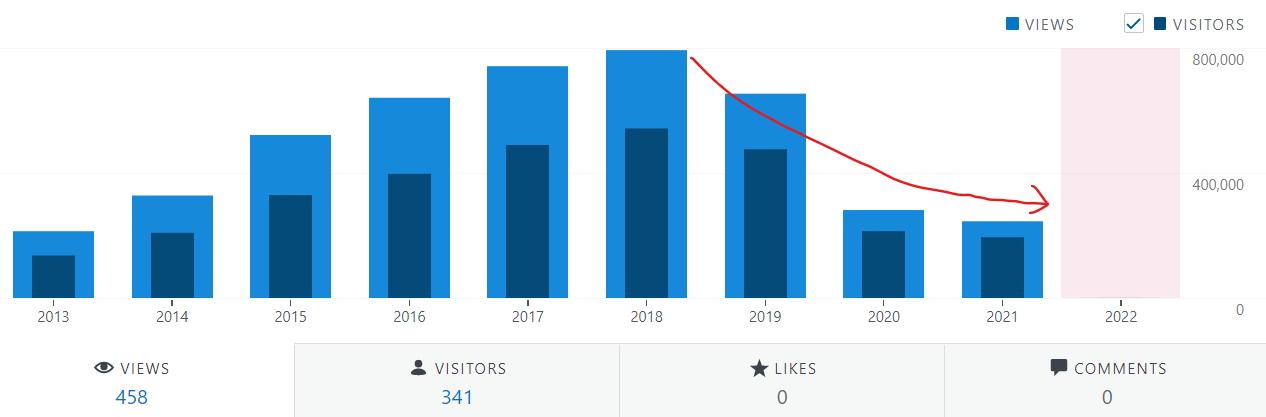

As I mentioned in last year’s annual report, I could not dedicate enough time to blogging in 2019 & 2020, and this continued in 2021 with fewer posts. Thus, due to 2-3 years of inactivity, the blog hits remain below ~1k per day, which is very low compared to what I was getting earlier (around 3k to 3.5k hits per day). So, you can see a drastic decline of hits in 2021 in the image below.

–> Here are some Crunchy numbers from 2021:

The Louvre Museum has a footfall of ~10 million visitors per year. This blog was viewed about 245,470 times by 194,886 unique visitors in 2021. If it were an exhibit at the Louvre Museum, it would take about ~9 days for that many people to see it.

There were 18 pictures uploaded, taking up a total of ~1 MB. That’s about 1 to 2 pictures every month.

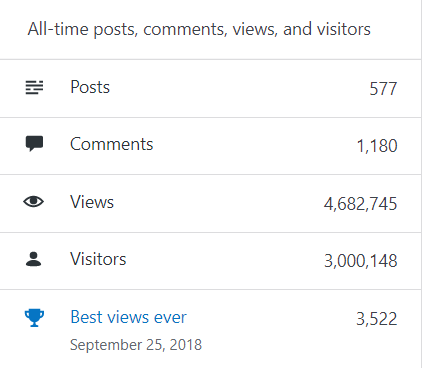

–> All-time posts, views, and visitors:

–> Posting Patterns:

In 2021, for the reason mentioned above, there were just 14 new posts, growing the total archive of this blog to 577 posts.

LONGEST STREAK: 4 posts in April 2021

–> Attractions in 2021:

These are the top 5 posts that got the most views in 2021:

0. Blog Home Page (30,424 views)

1. The server may be running out of resources, or the assembly may not be trusted with PERMISSION_SET = EXTERNAL_ACCESS or UNSAFE (12,151 views)

2. Reading JSON string with Nested array of elements (10,527 views)

3. Windows could not start SQL Server, error 17051, SQL Server Eval has expired (6,032 views)

4. Using IDENTITY function with SELECT statement in SQL Server (5,960 views)

5. SQL Server blocked access to procedure ‘dbo.sp_send_dbmail’ of component ‘Database Mail XPs’ (5,268 views)

–> How did they find me?

The top referring sites and search engines in 2021 were:

–> Where did they come from?

Visitors came from 210 countries; the top 5 were India, United States, United Kingdom, Canada and Australia:

–> Followers: 442

WordPress.com: 180

Email: 260

Facebook Page: 1,454

–> Alexa Rank (the lower the better)

Global Rank: ~1m (as of 31st DEC 2021)

Previous rank: 835,163 (back in 2020)

–> YouTube Channel:

– SQLwithManoj on YouTube

– Total Subscribers: 19,675

– Total Videos: 80

–> 2022 New Year Resolution:

– Write at least 1 blog post every week or two.

– Write on new features in SQL Server 2022 and SQL/Data world.

– I have started writing about the Microsoft Big Data Platform, covering Azure Data Lake, Databricks (Spark/Scala), CosmosDB, etc., so I will continue to explore this area and write more.

– Post at least 1 video every week on my YouTube channel

That’s all for 2021, see you in year 2022, all the best !!!

Connect with me on Facebook, Twitter, LinkedIn, YouTube, Google, Email