
Python – Delete/remove unwanted rows from a DataFrame

As you start using Python you will fall in love with it, as it’s very easy to solve problems by expressing complex logic in a simple, short and quick way. Here we will see how to remove rows from a DataFrame based on a list of invalid items.

Let’s create a sample Pandas DataFrame for our demo purpose:

import pandas as pd

sampleData = {

'CustId': list(range(101, 111)),

'CustomerName': ['Cust'+str(x) for x in range(101, 111)]}

cdf = pd.DataFrame(sampleData)

invalidList = [102, 103, 104]

The two dictionary entries above create 10 records with CustId ranging from 101 to 110 and matching CustomerName values such as Cust101, Cust102, etc.

In the code below we use the isin() function to get only the records present in invalidList. This fetches just the 3 invalid records from the DataFrame:

df = cdf[cdf.CustId.isin(invalidList)]

df

To get the records not present in invalidList, we just need to add the “~” operator, which reverses the result of the isin() call from the step above. This fetches the other 7 valid records from the DataFrame:

df = cdf[~cdf.CustId.isin(invalidList)]

df
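The two filters above can be combined into one small, self-contained sketch that builds the boolean mask once and reuses it. The names `is_invalid`, `invalid_df` and `valid_df` are illustrative and not part of the original post.

```python
import pandas as pd

# Same sample data as in the post.
cdf = pd.DataFrame({
    "CustId": list(range(101, 111)),
    "CustomerName": ["Cust" + str(x) for x in range(101, 111)],
})
invalid_list = [102, 103, 104]

# Build the boolean mask once and reuse it for both selections.
is_invalid = cdf["CustId"].isin(invalid_list)

invalid_df = cdf[is_invalid]   # rows whose CustId is in the list
valid_df = cdf[~is_invalid]    # "~" inverts the mask and keeps the rest

print(len(invalid_df), len(valid_df))  # prints: 3 7
```

Keeping the mask in a variable makes it easy to inspect which rows are being dropped before the DataFrame is overwritten.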

Python error – Length of passed values is 6, index implies 2 (while doing PIVOT with MultiIndex or multiple columns)

I’m new to Python and these days I’m using it for some Data Analysis & Metadata handling. Coming from a SQL background, I’m trying to use as many of the analysis features I use with SQL as I can, like Group By, Aggregate functions, Filtering, Pivot, etc.

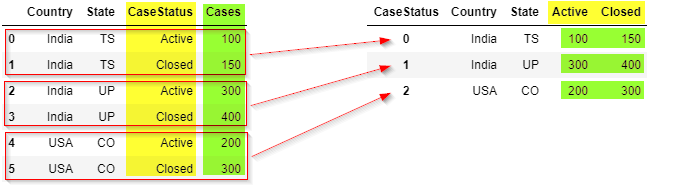

Now I had this particular requirement to PIVOT some columns based on multi-index keys, or multiple columns as shown below:

But while using the pivot() function in Python I was getting this weird error that I could not understand, because it did not occur when I used a single index column.

ValueError: Length of passed values is 6, index implies 2.

Let’s create a sample Pandas DataFrame for our demo purpose:

import pandas as pd

sampleData = {

'Country': ['India', 'India', 'India','India','USA','USA'],

'State': ['UP','UP','TS','TS','CO','CO'],

'CaseStatus': ['Active','Closed','Active','Closed','Active','Closed'],

'Cases': [100, 150, 300, 400, 200, 300]}

df = pd.DataFrame(sampleData)

df

Problem/Issue:

When I tried the code below, which calls the pivot() function with multiple index columns (a multi-index), it threw the error. I did not get the error when I used a single index column. So what could be the reason?

# Passing multi-index (or multiple columns) fails here:

df_pivot = df.pivot(index = ['Country','State'],

columns = 'CaseStatus',

values = 'Cases').reset_index()

df_pivot

Solution #1

While checking online I found that, in the pandas version I was using, the pivot() function accepted only a single column as the index key and not a list of columns (support for a list was added in pandas 1.1.0). So in this case we first need to call the set_index() function with the list of columns, as shown below:

# Use pivot() function with set_index() function:

df_pivot = (df.set_index(['Country', 'State'])

.pivot(columns='CaseStatus')['Cases']

.reset_index()

)

df_pivot
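Another way to get the same shape, which does not depend on how pivot() treats a list of index columns, is to reshape with set_index() and unstack(). The sketch below reuses the sample data from the post; it is an alternative reshaping technique, not the author's solution.

```python
import pandas as pd

df = pd.DataFrame({
    "Country": ["India", "India", "India", "India", "USA", "USA"],
    "State": ["UP", "UP", "TS", "TS", "CO", "CO"],
    "CaseStatus": ["Active", "Closed", "Active", "Closed", "Active", "Closed"],
    "Cases": [100, 150, 300, 400, 200, 300],
})

# Move the two key columns plus the pivot column into the index,
# then unstack the pivot column so its values become column headers.
df_pivot = (
    df.set_index(["Country", "State", "CaseStatus"])["Cases"]
      .unstack("CaseStatus")
      .reset_index()
)
df_pivot.columns.name = None  # drop the leftover "CaseStatus" axis label

print(df_pivot)
```

Like pivot(), this approach raises an error if the same Country/State/CaseStatus combination appears more than once, so it only suits data with unique keys.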

Solution #2

There is one more simple option: use the pivot_table() function as shown below to get the desired output:

# or use pivot_table() function:

df_pivot = pd.pivot_table(df,

index = ['Country', 'State'],

columns = 'CaseStatus',

values = 'Cases')

df_pivot
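One difference worth keeping in mind is that pivot_table() aggregates rows that share the same keys, using the mean by default, whereas pivot() refuses duplicates. The sketch below uses a small hypothetical dataset (not from the post) with a duplicated key to show the default and an explicit `aggfunc="sum"`.

```python
import pandas as pd

# Hypothetical data: India/UP/Active appears twice on purpose.
dup_df = pd.DataFrame({
    "Country": ["India", "India", "India"],
    "State": ["UP", "UP", "UP"],
    "CaseStatus": ["Active", "Active", "Closed"],
    "Cases": [100, 200, 150],
})

# Default aggregation is the mean of the duplicated rows.
mean_pivot = pd.pivot_table(dup_df,
                            index=["Country", "State"],
                            columns="CaseStatus",
                            values="Cases")

# An explicit aggfunc adds the duplicated rows up instead.
sum_pivot = pd.pivot_table(dup_df,
                           index=["Country", "State"],
                           columns="CaseStatus",
                           values="Cases",
                           aggfunc="sum")

print(mean_pivot)
print(sum_pivot)
```

For case counts like the ones in this post, a sum is usually the more meaningful choice when duplicates are possible.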

Cosmos DB & PySpark – Retrieve all attributes from all Collections under all Databases

In a [previous post] we saw how to retrieve all attributes from the items (JSON documents) of all Collections under all Databases by using C# .NET code.

In this post we will see how to retrieve the same information in an Azure Databricks environment by using Python instead of C# .NET code.

First of all, make sure that the Azure Cosmos DB SQL API library is installed on your Databricks cluster. [Link if not done]

Then use the below script which:

1. Connects to Cosmos DB by using the CosmosClient() method.

2. Gets the list of all Databases by using the list_databases() method.

3. Iterates through all Databases and gets the list of all Containers by using the list_containers() method.

4. Iterates through all Containers and queries the items by using the query_items() method.

5. Stores each Key & Value present in the Container item in the “metadataInfo” dictionary object.

6. Appends the Database, Container & Item level details held in “metadataInfo” to the List object named “metadataList”.

7. Finally uses the “metadataList” object to create a DataFrame by using the createDataFrame() method.

Get the Cosmos Uri & Primary Key from the Cosmos DB Overview tab and apply in the code below:

import azure.cosmos.cosmos_client as cosmos_client

import azure.cosmos.errors as errors

import azure.cosmos.exceptions as exceptions

import azure.cosmos.http_constants as http_constants

import json

cosmosUri = "https://YourCosmosDBName.documents.azure.com:443/"

pKey = "PrimaryKey=="

client = cosmos_client.CosmosClient(cosmosUri, {'masterKey': pKey})

cosmosDBsList = client.list_databases()

#Create a list to store the metadata

metadataList = []

#Iterate over all DBs

for eachCosmosDBsList in cosmosDBsList:

    #print("\nDatabase Name: {}".format(eachCosmosDBsList['id']))

    dbClient = client.get_database_client(eachCosmosDBsList['id'])

    #Iterate over all Containers

    for containersList in dbClient.list_containers():

        #print("\n- Container Name: {}".format(containersList['id']))

        conClient = dbClient.get_container_client(containersList['id'])

        #Query Container and read just TOP 1 row

        for queryItems in conClient.query_items("select top 1 * from c",

                                                enable_cross_partition_query=True):

            for itemKey, itemValue in queryItems.items():

                #print(itemKey, " = ", itemValue)

                #Create a dictionary to store metadata info at attribute/field level

                metadataInfo = {}

                metadataInfo["Source"] = eachCosmosDBsList['id']

                metadataInfo["Entity"] = containersList['id']

                metadataInfo["Attribute"] = itemKey

                metadataInfo["Value"] = itemValue

                metadataList.append(metadataInfo)

#print(metadataList)

from pyspark.sql.types import *

mySchema = StructType([ StructField("Source", StringType(), True)

,StructField("Entity", StringType(), True)

,StructField("Attribute", StringType(), True)

,StructField("Value", StringType(), True)])

df = spark.createDataFrame(metadataList, schema=mySchema)

df.createOrReplaceTempView("metadataDF")

display(df)
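The innermost loop of the script can also be pulled out into a small helper that works on any dictionary, which makes it easy to try without a Cosmos DB connection. This is only a sketch: `flatten_item`, the sample item and the database/container names are hypothetical, and serialising non-string values with json.dumps() is an assumption about the desired output rather than something the script above does.

```python
import json

def flatten_item(source, entity, item):
    """Return one metadata row per top-level attribute of a JSON item."""
    rows = []
    for key, value in item.items():
        # Keep strings as they are; turn numbers, lists and nested
        # objects into JSON text so every Value is a plain string.
        text = value if isinstance(value, str) else json.dumps(value)
        rows.append({"Source": source, "Entity": entity,
                     "Attribute": key, "Value": text})
    return rows

# Hypothetical item, standing in for one row returned by query_items().
sample_item = {"id": "101", "empId": 101, "address": {"city": "Pune"}}

rows = flatten_item("SampleDB", "SampleContainer", sample_item)
for row in rows:
    print(row)
```

Inside the loops above, the returned rows could be added to metadataList with `metadataList.extend(...)` in place of the per-attribute dictionary code.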

Using Python in Azure Databricks with Cosmos DB – DDL & DML operations by using “Azure-Cosmos” library for Python

In a [previous post] we saw how to connect to Cosmos DB from Databricks by using the Apache Spark to Azure Cosmos DB connector. But that connector is limited to reading and writing data in Cosmos DB from Databricks compute using the Scala language.

In this post we will see how we can do more in terms of managing entire Cosmos DB databases, containers/collections and items (JSON documents) from Databricks by using the Azure Cosmos DB SQL API SDK for Python.

Here we will perform some DDL & DML operations on Cosmos DB such as:

– Creating a new Database

– Creating a new Container

– Inserting new items

– Reading items from Container

– Upserting/Updating items in Container

– Deleting items from Container

– Finally deleting the Container and Database

So first go to your Azure Databricks cluster, open the Libraries tab, click Install New, select PyPI in the popup, type “azure-cosmos” in the Package text box, and finally click the Install button. This installs the Azure Cosmos DB SQL API library, which then shows up in the Libraries tab.

Use the below sample code to import the required libraries and establish connection with Cosmos DB. You need to get the Cosmos Uri & Primary Key from the Cosmos DB Overview tab and apply in the code below:

import azure.cosmos.cosmos_client as cosmos_client

from azure.cosmos import CosmosClient, PartitionKey, exceptions

cosmosUri = 'https://YourCosmosDBName.documents.azure.com:443/'

pKey = 'MasterPrimaryKey'

client = cosmos_client.CosmosClient(cosmosUri, {'masterKey': pKey})

# 1. Create a new Database:

newDatabaseName = 'ManojDB'

newDatabase = client.create_database(newDatabaseName)

print('\n1. Database created with name: ', newDatabase.id)

# 2. Get Database properties

dbClient = client.get_database_client(newDatabaseName)

dbProperties = dbClient.read()

print('\n2. DB Properties: ', dbProperties)

# 3. Create a new Container:

newContainerName = 'ManojContainer'

newContainer = dbClient.create_container(id=newContainerName,

partition_key=PartitionKey(path="/id"))

print('\n3. Container created with name: ', newContainer.id)

# 4. Create items in the Container:

containerClient = dbClient.get_container_client(newContainerName)

item1 = {'id' : '101', 'empId': 101,

'empFirstName': 'Manoj', 'empLastName': 'Pandey'}

containerClient.create_item(item1)

item2 = {'id' : '102', 'empId': 102,

'empFirstName': 'Saurabh', 'empLastName': 'Sharma'}

containerClient.create_item(item2)

item3 = {'id' : '103', 'empId': 103,

'empFirstName': 'Hitesh', 'empLastName': 'Kumar'}

containerClient.create_item(item3)

print('\n4. Inserted 3 items in ', newContainer.id)

# 5. Read items from Container:

print('\n5. Get all 3 items from Container:')

for items in containerClient.query_items(

        query='SELECT * FROM c',

        enable_cross_partition_query = True):

    print(items)
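Steps 1 and 3 above fail if the Database or Container already exists, for example when the notebook is run a second time. A minimal sketch of a re-runnable setup is shown below, using the SDK's create_database_if_not_exists() and create_container_if_not_exists() methods. The helper name `ensure_container` is hypothetical, and the commented usage assumes the `client` object and `PartitionKey` import from the code above.

```python
def ensure_container(client, database_name, container_name, partition_key):
    """Return (database, container) proxies, creating each only if missing."""
    # Both calls return the existing resource when it is already there,
    # instead of raising a "resource exists" error.
    database = client.create_database_if_not_exists(id=database_name)
    container = database.create_container_if_not_exists(
        id=container_name,
        partition_key=partition_key)
    return database, container

# Usage with the objects from the post (needs a live Cosmos DB account):
# dbClient, containerClient = ensure_container(
#     client, 'ManojDB', 'ManojContainer', PartitionKey(path="/id"))
```

The helper takes the client as a parameter, so it has no connection details of its own and can be reused across notebooks.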

So far we’ve created a Database & a Container in Cosmos DB, and inserted a few items/records into it.

Now we will do some more DML operations like UPSERT/UPDATE & DELETE items from the collections:

# 6. Update/Upsert an item in Container:

updateItem = {'id' : '103', 'empId': 103,

'empFirstName': 'Hitesh', 'empLastName': 'Chouhan'}

containerClient.upsert_item(updateItem)

print('\n6. Updated LastName of EmpId = 103:')

for items in containerClient.query_items(

        query='SELECT * FROM c WHERE c.empId = 103',

        enable_cross_partition_query = True):

    print(items)

# 7. Delete an item from Container:

print('\n7. Delete item/record with EmpId = 103:')

for items in containerClient.query_items(

        query='SELECT * FROM c WHERE c.empId = 103',

        enable_cross_partition_query = True):

    containerClient.delete_item(items, partition_key='103')

for items in containerClient.query_items(

        query='SELECT * FROM c',

        enable_cross_partition_query = True):

    print(items)
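The delete step above hard-codes the partition key value '103'. A slightly more general sketch reads the key from each returned item instead, which works here because the Container was created with "/id" as its partition key path. The helper name `delete_matching_items` is hypothetical, and it relies only on the query_items() and delete_item() calls already used in this post.

```python
def delete_matching_items(container, query):
    """Delete every item returned by the query and return the deleted ids."""
    deleted_ids = []
    for item in container.query_items(query=query,
                                      enable_cross_partition_query=True):
        # Assumption: the container is partitioned on /id, as in this post,
        # so the item's own id is also its partition key value.
        container.delete_item(item, partition_key=item['id'])
        deleted_ids.append(item['id'])
    return deleted_ids

# Usage with the container client from the post:
# delete_matching_items(containerClient,
#                       'SELECT * FROM c WHERE c.empId = 103')
```

For a container partitioned on a different path, the partition_key argument would need to read that attribute instead of 'id'.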

Finally, we will clean up by deleting the Container and Database that we created initially:

# 8. Delete Container

dbClient.delete_container(newContainer)

print('\n8. Deleted Container ', newContainer)

# 9. Delete Database

client.delete_database(newDatabaseName)

print('\n9. Deleted Database ', newDatabaseName)

Python error: while converting Pandas Dataframe or Python List to Spark Dataframe (Can not merge type)

Data typecasting errors are common when you work with different DataFrames across different languages. In this case I got a datatype-mixing error while converting a Pandas DataFrame to a Spark DataFrame:

import pandas as pd

pd_df = pd.DataFrame([(101, 'abc'),

('def', 201),

('xyz', 'pqr')],

columns=['col1', 'col2'])

df = spark.createDataFrame(pd_df)

display(df)

TypeError: field col1: Can not merge type <class 'pyspark.sql.types.LongType'> and <class 'pyspark.sql.types.StringType'>

While converting the Pandas DataFrame to a Spark DataFrame, Spark throws this error because it cannot infer a single data type for a column that holds mixed types of data.

In this case you just need to explicitly tell Spark which datatype to use by creating a schema and passing it to createDataFrame(), as shown below:

import pandas as pd

pd_df = pd.DataFrame([(101, 'abc'),

('def', 201),

('xyz', 'pqr')],

columns=['col1', 'col2'])

from pyspark.sql.types import *

df_schema = StructType([StructField("col1", StringType(), True)\

,StructField("col2", StringType(), True)])

df = spark.createDataFrame(pd_df, schema=df_schema)

display(df)
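The mixed types can also be spotted, and normalised, on the Pandas side before the DataFrame is handed to Spark. The sketch below runs with Pandas alone; `types_per_column` and `clean_df` are illustrative names, and casting everything with astype(str) is an alternative to the explicit schema above, not a replacement for it.

```python
import pandas as pd

pd_df = pd.DataFrame([(101, 'abc'),
                      ('def', 201),
                      ('xyz', 'pqr')],
                     columns=['col1', 'col2'])

# List the Python types found in each column; more than one type in a
# column is what triggers the "Can not merge type" error in Spark.
types_per_column = {
    col: sorted({type(value).__name__ for value in pd_df[col]})
    for col in pd_df.columns
}
print(types_per_column)  # {'col1': ['int', 'str'], 'col2': ['int', 'str']}

# Cast every value to a string so each column holds a single type.
clean_df = pd_df.astype(str)

# In a Databricks notebook the cleaned frame can then be converted:
# df = spark.createDataFrame(clean_df)
```

Casting on the Pandas side is convenient for quick exploration, while the explicit schema keeps the intended Spark types documented in the code.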