
SET STATISTICS IO and TIME – What are Logical, Physical and Read-Ahead Reads?

In my previous post [link] I talked about SET STATISTICS IO and Scan Count.

In this post I will talk about Page Reads, i.e. Logical Reads, Physical Reads and Read-Ahead Reads.

–> Types of Reads and their meaning:

– Logical Reads: the number of 8 KB Pages read from the Data Cache (buffer pool). These Pages are placed in the Data Cache by Physical Reads or Read-Ahead Reads.

– Physical Reads: the number of 8 KB Pages read from Disk because they were not already in the Data Cache. Once the Pages are in the Data Cache they are served by Logical Reads, so Physical Reads do not happen (or happen only minimally) when the same queries run again.

– Read-Ahead Reads: the number of 8 KB Pages read from Disk in advance and placed into the Data Cache. They are a kind of advance Physical Read: the DB Engine brings Pages into the Data Cache before the query asks for them, wherever it anticipates that the query will need those Data/Index Pages.
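To see the Data Cache these definitions refer to, a minimal sketch like the one below counts how many 8 KB Pages of the current database are in the buffer pool right now. It assumes the documented DMV sys.dm_os_buffer_descriptors (one row per cached Page) and the VIEW SERVER STATE permission; the column aliases are only illustrative.

```sql
-- Each row in sys.dm_os_buffer_descriptors represents one 8 KB Page in the Data Cache
SELECT
    COUNT(*)              AS cached_pages,
    COUNT(*) * 8 / 1024.0 AS cached_mb      -- 8 KB per Page, converted to MB
FROM sys.dm_os_buffer_descriptors
WHERE database_id = DB_ID();                -- current database only
```

Running it before and after the queries in the example below gives a rough, database-wide view of how the Data Cache fills up.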

–> Let's go through some T-SQL code examples to make this easier to understand:

USE [AdventureWorks2012]

GO

-- We will create a new table here and populate it with records from AdventureWorks's Person.Person table:

SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, Suffix, EmailPromotion, ModifiedDate

INTO dbo.Person

FROM [Person].[Person]

GO

-- Let's clear the Data Cache (memory buffer). CHECKPOINT first writes dirty Pages to disk so that DBCC DROPCLEANBUFFERS can remove them too.

-- Test servers only: these commands affect the whole instance.

CHECKPOINT

DBCC FREEPROCCACHE

DBCC DROPCLEANBUFFERS

GO

-- Run the following block of T-SQL statements:

SET STATISTICS IO ON

SET STATISTICS TIME ON

SELECT * FROM dbo.Person

SELECT * FROM dbo.Person

SET STATISTICS IO OFF

SET STATISTICS TIME OFF

GO

Output Message:

(19972 row(s) affected)

Table 'Person'. Scan count 1, logical reads 148, physical reads 0, read-ahead reads 148, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times: CPU time = 31 ms, elapsed time = 297 ms.

(19972 row(s) affected)

Table 'Person'. Scan count 1, logical reads 148, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times: CPU time = 16 ms, elapsed time = 186 ms.

The Output Message above shows a different number of Reads for the same query when it is run twice.

– On the first execution of the query the Data Cache was empty, so the Pages had to come from Disk. Because the query scans the whole table, the DB Engine's read-ahead mechanism pre-fetched the Pages into memory ahead of the scan with Read-Ahead Reads. That is why we see zero Physical Reads and 148 Read-Ahead Reads. Once the Pages were in the Data Cache, the DB Engine read the records from there with Logical Reads, again 148.

– On the second execution of the query the Data Cache is already populated with the Data Pages, so there is no need for Physical Reads or Read-Ahead Reads, and both show zero. As the records are already in the Data Cache, the DB Engine reads them with Logical Reads, the same 148 as before.

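Note: You can also see the performance gain from Data Caching: the Elapsed Time has gone down from 297 ms to 186 ms, and the CPU Time from 31 ms to 16 ms. CPU figures this small vary from run to run, so the Elapsed Time is the better indicator here.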
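SET STATISTICS IO only reports reads in the Messages tab of the current session. As a sketch of another way to look at the same counters, the query below reads them from the plan cache through sys.dm_exec_query_stats and sys.dm_exec_sql_text. Since the example above ran DBCC FREEPROCCACHE first, only the demo's statements should show up; the LIKE filter on the batch text is a hypothetical convenience for this demo, and these counters are cumulative per cached plan rather than per session.

```sql
-- Read counters SQL Server keeps for each cached statement plan
SELECT TOP (10)
    qs.execution_count,
    qs.last_logical_reads,
    qs.last_physical_reads,
    qs.total_logical_reads,
    qs.total_physical_reads,
    st.text AS batch_text                          -- whole batch text, not just the statement
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE '%FROM dbo.Person%'             -- hypothetical filter for this demo
  AND st.text NOT LIKE '%dm_exec_query_stats%'     -- exclude this monitoring query itself
ORDER BY qs.last_execution_time DESC;
```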

–> On the database side we can check how many Pages the table [dbo].[Person] occupies on Disk.

Running the DBCC IND statement below (an undocumented command) lists one row per Page allocated to the table, so the number of Data Pages should match the 148 Pages cached above:

DBCC IND('AdventureWorks2012','dbo.Person',-1)

GO

The above statement returns 149 rows: the 1st row is the IAM Page and the remaining 148 are the Data Pages of the table that were cached into the buffer pool.
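Because DBCC IND is undocumented, a documented way to get similar page counts is the sys.dm_db_partition_stats DMV. A minimal sketch, assuming dbo.Person still exists: in_row_data_page_count should line up with the Data Pages DBCC IND lists, while used_page_count also includes allocation Pages such as the IAM Page.

```sql
-- Page counts for the heap dbo.Person from a documented DMV
SELECT
    ps.index_id,                    -- 0 = heap
    ps.in_row_data_page_count,      -- data Pages only
    ps.used_page_count,             -- also counts IAM and other allocation Pages
    ps.reserved_page_count,         -- Pages reserved, used or not
    ps.row_count
FROM sys.dm_db_partition_stats AS ps
WHERE ps.object_id = OBJECT_ID('dbo.Person');
```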

–> We can also check on the Data Cache side how many 8 KB Pages of the table are in the Data Cache, before and after caching:

-- Let's clear the Data Cache again (CHECKPOINT first, so no dirty Pages stay behind):

CHECKPOINT

DBCC FREEPROCCACHE

DBCC DROPCLEANBUFFERS

GO

-- Run this DMV query. This time it runs on a cold cache and returns information about the Pages cached before our query:

;WITH s_obj AS (

SELECT OBJECT_NAME(OBJECT_ID) AS name, index_id, allocation_unit_id, OBJECT_ID

FROM sys.allocation_units AS au

INNER JOIN sys.partitions AS p ON au.container_id = p.hobt_id AND (au.type = 1 OR au.type = 3)

UNION ALL

SELECT OBJECT_NAME(OBJECT_ID) AS name, index_id, allocation_unit_id, OBJECT_ID

FROM sys.allocation_units AS au

INNER JOIN sys.partitions AS p ON au.container_id = p.partition_id AND au.type = 2

),

obj AS (

SELECT s_obj.name, s_obj.index_id, s_obj.allocation_unit_id, s_obj.OBJECT_ID, i.name IndexName, i.type_desc IndexTypeDesc

FROM s_obj

INNER JOIN sys.indexes i ON i.index_id = s_obj.index_id AND i.OBJECT_ID = s_obj.OBJECT_ID

)

SELECT COUNT(*) AS cached_pages_count, obj.name AS BaseTableName, IndexName, IndexTypeDesc

FROM sys.dm_os_buffer_descriptors AS bd

INNER JOIN obj ON bd.allocation_unit_id = obj.allocation_unit_id

INNER JOIN sys.tables t ON t.object_id = obj.OBJECT_ID

WHERE database_id = DB_ID() AND obj.name = 'Person' AND schema_name(t.schema_id) = 'dbo'

GROUP BY obj.name, index_id, IndexName, IndexTypeDesc

ORDER BY cached_pages_count DESC;

-- Run the following query; it will populate the Data Cache:

SELECT * FROM dbo.Person

-- Now run the previous DMV query again. This time it runs on cached data and returns information about the cached Pages.

Query Output:

| DMV query run | cached_pages_count | BaseTableName | IndexName | IndexTypeDesc |
|---|---|---|---|---|
| First (before caching) | 2 | Person | NULL | HEAP |
| Second (after caching) | 150 | Person | NULL | HEAP |

So, from the above output you can clearly see that only 2 Pages of the table were in the Data Cache before caching.

After executing the query on table [dbo].[Person], its Data Pages got cached into the buffer pool. Running the same DMV query again returns the number of cached Pages in the Data Cache, i.e. the same 148 Pages (150 - 2).
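The output above does not say which kinds of Pages the extra 2 are. One way to look, sketched here under the assumption that dbo.Person is still a heap with only in-row data, is to group the cached Pages of the table by the page_type column of sys.dm_os_buffer_descriptors (values such as DATA_PAGE or IAM_PAGE).

```sql
-- Cached Pages of dbo.Person broken down by page type
SELECT
    bd.page_type,
    COUNT(*) AS cached_pages
FROM sys.dm_os_buffer_descriptors AS bd
INNER JOIN sys.allocation_units AS au
    ON bd.allocation_unit_id = au.allocation_unit_id
INNER JOIN sys.partitions AS p
    ON au.container_id = p.hobt_id
   AND au.type IN (1, 3)            -- IN_ROW_DATA and ROW_OVERFLOW_DATA
WHERE bd.database_id = DB_ID()
  AND p.object_id = OBJECT_ID('dbo.Person')
GROUP BY bd.page_type
ORDER BY cached_pages DESC;
```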

-- Final Cleanup

DROP TABLE dbo.Person

GO

>> Check out and subscribe to my [YouTube videos] on SQL Server.

SQL Server 2012 | Temp Tables are created with negative Object IDs

These days I'm working on a SQL Server upgrade from 2008 R2 to 2012 for one of our project modules.

Today, while working on it, I got blocked while installing a Build. The build was failing with the following error:

Error SQL72014: .Net SqlClient Data Provider: Msg 2714, Level 16, State 6, Line 115 There is already an object named ‘#temp’ in the database.

I checked the code and found the line where it was failing:

IF object_id('tempdb.dbo.#temp') > 0

DROP TABLE #temp

I checked this code on SQL Server 2008 R2 and it was working perfectly.

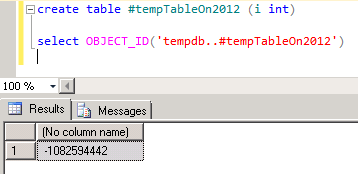

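To check and validate this, I created a temp table on SQL Server 2012 and found that it is created with a negative Object ID. Because object_id('tempdb.dbo.#temp') now returns a negative value, the check "> 0" is false, so DROP TABLE #temp is skipped and the existing #temp is still there when the script tries to create it again.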

This is a new change in SQL Server 2012, but it is not mentioned anywhere in MSDN BOL.

So, to make this legacy code work, we have to refactor all such cases as follows:
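A small repro of the behaviour described above, as a sketch to run in any database on SQL Server 2012 or later: it creates a temp table, shows its object ID, and shows which branch the legacy "> 0" check takes. The PRINT messages are illustrative only.

```sql
CREATE TABLE #temp (id INT);

-- Per the CSS quote further below, SQL Server 2012+ gives user temp tables negative object IDs
SELECT OBJECT_ID('tempdb..#temp') AS temp_object_id;

-- The legacy "> 0" test therefore takes the ELSE branch on 2012+
IF OBJECT_ID('tempdb..#temp') > 0
BEGIN
    PRINT 'object_id > 0: the legacy DROP TABLE would run';
END
ELSE
BEGIN
    PRINT 'object_id <= 0: the legacy DROP TABLE is skipped';
END

DROP TABLE #temp;
```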

IF object_id('tempdb.dbo.#temp') IS NOT NULL

DROP TABLE #temp
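A slightly stricter variant of the same fix, sketched below, also passes 'U' as the second argument of OBJECT_ID so that only a user table matches. The GO separators keep each CREATE TABLE in its own batch. On SQL Server 2016 and later the guard can be shortened to DROP TABLE IF EXISTS, which does not exist in 2012, so it is shown only as a comment.

```sql
-- First run: create the temp table, as an earlier part of a build script might
CREATE TABLE #temp (id INT);
GO
-- Rerunnable guard: compare with NULL instead of > 0; 'U' restricts the match to user tables
IF OBJECT_ID('tempdb..#temp', 'U') IS NOT NULL
    DROP TABLE #temp;
GO
-- Re-creating now succeeds instead of failing with Msg 2714
CREATE TABLE #temp (id INT);
GO
-- SQL Server 2016 and later only:
-- DROP TABLE IF EXISTS #temp;
DROP TABLE #temp;
GO
```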

Confirmation from the Microsoft SQL team blog [CSS SQL Server Engineers]:

“in SQL Server 2012, we made a conscious change to the algorithm so that objectids for user-defined temporary tables would be a particular range of values. Most of the time we use hex arithmetic to define these ranges and for this new algorithm these hex values spill into a specific set of negative numbers for object_id, which is a signed integer or LONG type. So in SQL Server 2012, you will now always see object_id values < 0 for user-defined temp tables when looking at a catalog view like sys.objects.”

More Info on: http://blogs.msdn.com/b/psssql/archive/2012/09/09/revisiting-inside-tempdb.aspx

Passing multiple/dynamic values to Stored Procedures & Functions | Part 4 – by using TVP

This is the fourth and last part of this series. In previous posts we talked about passing multiple values with the following approaches: CSV, XML and #table. Here we will use a feature introduced in SQL Server 2008, i.e. TVP (Table-Valued Parameters).

As per MS BOL, TVPs are declared by using user-defined table types. We can use TVPs to send multiple rows of data to Stored Procedures or Functions without creating a temporary table or many parameters. TVPs are passed by reference to the routines, thus avoiding a copy of the input data.

Let’s check how we can make use of this new feature (TVP):

-- First create a User-Defined Table type with a column that will store multiple values as multiple records:

CREATE TYPE dbo.tvpNamesList AS TABLE

(

Name NVARCHAR(100) NOT NULL,

PRIMARY KEY (Name)

)

GO

-- Create the SP and use the User-Defined Table type created above and declare it as a parameter:

CREATE PROCEDURE uspGetPersonDetailsTVP (

@tvpNames tvpNamesList READONLY

)

AS

BEGIN

SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, ModifiedDate

FROM [Person].[Person] PER

WHERE EXISTS (SELECT Name FROM @tvpNames tmp WHERE tmp.Name = PER.FirstName)

ORDER BY FirstName, LastName

END

GO

-- Now, create a Table Variable of the type created above:

DECLARE @tblPersons AS tvpNamesList

INSERT INTO @tblPersons

SELECT Names FROM (VALUES ('Charles'), ('Jade'), ('Jim'), ('Luke'), ('Ken') ) AS T(Names)

-- Pass this table variable as parameter to the SP:

EXEC uspGetPersonDetailsTVP @tblPersons

GO

-- Check the output, objective achieved 🙂

-- Final Cleanup (drop the procedure first, as it depends on the type)

DROP PROCEDURE uspGetPersonDetailsTVP

DROP TYPE dbo.tvpNamesList

GO

So, we saw how to use TVPs with Stored Procedures; they are used the same way with UDFs.

TVPs are a great way to pass an array of values as a single parameter to SPs and UDFs. There is a lot to know and understand about TVPs, their benefits and usage; check this [link].
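As a sketch of the UDF case, the block below uses an inline table-valued function with the same filter logic as the SP. The type name dbo.tvpNameFilter and function name dbo.ufnGetPersonsByFirstName are hypothetical, chosen so the block runs on its own after the cleanup above; it assumes the AdventureWorks2012 database.

```sql
USE [AdventureWorks2012]
GO
-- Hypothetical table type for the function example
CREATE TYPE dbo.tvpNameFilter AS TABLE
(
    Name NVARCHAR(100) NOT NULL PRIMARY KEY
);
GO
-- Inline table-valued function taking the TVP (TVPs must be declared READONLY)
CREATE FUNCTION dbo.ufnGetPersonsByFirstName (@tvpNames dbo.tvpNameFilter READONLY)
RETURNS TABLE
AS
RETURN
(
    SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, ModifiedDate
    FROM [Person].[Person] AS PER
    WHERE EXISTS (SELECT 1 FROM @tvpNames AS tmp WHERE tmp.Name = PER.FirstName)
);
GO
-- Fill a table variable of the type and pass it to the function
DECLARE @tblPersons AS dbo.tvpNameFilter;

INSERT INTO @tblPersons (Name)
VALUES ('Charles'), ('Jade'), ('Jim'), ('Luke'), ('Ken');

SELECT *
FROM dbo.ufnGetPersonsByFirstName(@tblPersons)
ORDER BY FirstName, LastName;
GO
-- Cleanup: the function depends on the type, so drop it first
DROP FUNCTION dbo.ufnGetPersonsByFirstName;
DROP TYPE dbo.tvpNameFilter;
GO
```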

Passing multiple/dynamic values to Stored Procedures & Functions | Part 3 – by using #table

In my previous posts we saw how to pass multiple values to an SP by using a CSV list and XML data, which are quite similar approaches. In this post we will see how to achieve the same objective without passing values as parameters, by using temporary (temp, #) tables.

In this third part of the series the Stored Procedure is created so that it uses a temporary table that does not exist at compile time (SQL Server's deferred name resolution allows this). At run time, however, the temp table must be created before the SP is executed. With this approach there is no need to pass any parameter to the SP; let's see how:

-- Create Stored Procedure with no parameter, it will use the temp table created outside the SP:

CREATE PROCEDURE uspGetPersonDetailsTmpTbl

AS

BEGIN

SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, ModifiedDate

FROM [Person].[Person] PER

WHERE EXISTS (SELECT Name FROM #tblPersons tmp WHERE tmp.Name = PER.FirstName)

ORDER BY FirstName, LastName

END

GO

-- Now, create a temp table and insert records with the same set of values we used in the previous 2 posts:

CREATE TABLE #tblPersons (Name NVARCHAR(100))

INSERT INTO #tblPersons

SELECT Names FROM (VALUES ('Charles'), ('Jade'), ('Jim'), ('Luke'), ('Ken') ) AS T(Names)

-- Now execute the SP, it will use the above records as input and give you required results:

EXEC uspGetPersonDetailsTmpTbl

-- Check the output, objective achieved 🙂

DROP TABLE #tblPersons

GO

-- Final Cleanup

DROP PROCEDURE uspGetPersonDetailsTmpTbl

GO

This approach is much better than the previous 2 approaches of using CSV or XML data.

The values are inserted into a temp table and then accessed inside the SP. No parsing is involved, as the records are read directly from the temp table and used in the SQL query just like any normal table. Also, unlike CSV and XML data, there is no limit on the number of records.

But the catch is that the temp table must exist before the SP is executed; if for some reason it is not there, the SP fails with an "Invalid object name '#tblPersons'" error. This is rare and can be handled with some checks.

In my next and last [blog post] of this series we will see a feature of SQL Server 2008, i.e. TVP, which can be used in these types of scenarios.
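One possible check, sketched below, tests for the temp table at the top of the procedure and raises a clear error instead of letting the SELECT fail. The procedure name dbo.uspGetPersonDetailsTmpTblChecked is hypothetical; the sketch relies on deferred name resolution, so the SELECT that references #tblPersons should only be compiled if execution reaches it.

```sql
USE [AdventureWorks2012]
GO
-- Hypothetical variant of uspGetPersonDetailsTmpTbl with an existence check
CREATE PROCEDURE dbo.uspGetPersonDetailsTmpTblChecked
AS
BEGIN
    SET NOCOUNT ON;

    -- The caller must create #tblPersons first; stop with a clear message if it did not
    IF OBJECT_ID('tempdb..#tblPersons', 'U') IS NULL
    BEGIN
        RAISERROR('Create and populate #tblPersons before executing this procedure.', 16, 1);
        RETURN;
    END;

    SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, ModifiedDate
    FROM [Person].[Person] AS PER
    WHERE EXISTS (SELECT 1 FROM #tblPersons AS tmp WHERE tmp.Name = PER.FirstName)
    ORDER BY FirstName, LastName;
END
GO
-- Calling it without the temp table prints the custom message
BEGIN TRY
    EXEC dbo.uspGetPersonDetailsTmpTblChecked;
END TRY
BEGIN CATCH
    PRINT ERROR_MESSAGE();
END CATCH
GO
-- Cleanup
DROP PROCEDURE dbo.uspGetPersonDetailsTmpTblChecked;
GO
```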

Passing multiple/dynamic values to Stored Procedures & Functions | Part 2 – by passing XML

In my previous post [Part 1] we saw how to pass multiple values to a parameter as a CSV string in an SP.

In this second part of the series we will put the set of values in an XML string and pass it as an XML parameter to the SP. Inside the SP we will parse this XML and use those values in our SQL queries, just like we did in the previous post with the CSV string:

USE [AdventureWorks2012]

GO

-- Create an SP with XML type parameter:

CREATE PROCEDURE uspGetPersonDetailsXML (

@persons XML

)

AS

BEGIN

--DECLARE @persons XML

--SET @persons = '<root><Name>Charles</Name><Name>Jade</Name><Name>Jim</Name><Name>Luke</Name><Name>Ken</Name></root>'

SELECT T.C.value('.', 'NVARCHAR(100)') AS [Name]

INTO #tblPersons

FROM @persons.nodes('/root/Name') as T(C)

SELECT BusinessEntityID, Title, FirstName, MiddleName, LastName, ModifiedDate

FROM [Person].[Person] PER

WHERE EXISTS (SELECT Name FROM #tblPersons tmp WHERE tmp.Name = PER.FirstName)

ORDER BY FirstName, LastName

DROP TABLE #tblPersons

END

GO

-- Create XML string:

DECLARE @xml XML

SET @xml = '<root>

<Name>Charles</Name>

<Name>Jade</Name>

<Name>Jim</Name>

<Name>Luke</Name>

<Name>Ken</Name>

</root>'

-- Use the XML string as the parameter while calling the SP:

EXEC uspGetPersonDetailsXML @xml

GO

-- Check the output, objective achieved 🙂

-- Final Cleanup

DROP PROCEDURE uspGetPersonDetailsXML

GO
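If the names already live in a table or query, the XML parameter does not have to be typed by hand. A minimal sketch using FOR XML PATH builds a value of the same shape (a root element with one Name child per row). To pass it with EXEC uspGetPersonDetailsXML @xml, run it before the cleanup step above.

```sql
-- Build the XML parameter from rows:
-- FOR XML PATH('') emits one <Name> element per row, ROOT('root') wraps them,
-- and TYPE returns the result as the xml data type
DECLARE @xml XML;

SET @xml = (
    SELECT Name
    FROM (VALUES ('Charles'), ('Jade'), ('Jim'), ('Luke'), ('Ken')) AS T(Name)
    FOR XML PATH(''), ROOT('root'), TYPE
);

-- Same structure as the hand-typed string: <root><Name>...</Name>...</root>
SELECT @xml AS persons_xml;
```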

This approach looks much cleaner and more stable to me than the previous one (CSV). XML is a de facto standard for storing and transmitting data in a structured way, so I prefer an XML string over a CSV string in these types of cases.

The CSV approach is not much different from this one: it internally converts the CSV string to XML and then parses it. Thus, as with CSV, if the XML string becomes lengthy it will take time to parse the whole XML before the values can be used in the SQL queries in the SP.

In the next [blog post] we will see how to perform the same operation by using temporary (temp, #) tables.