Archive

New in-built Table-Valued Function STRING_SPLIT() in SQL Server 2016 – to split strings

Till now it was bit tricky to split a Sentence or CSV String to multiple values or rows, and we used different logic to do the same.

In my [previous post] I blogged similar logic to Split a String and Combine back by using some XML syntax.

In SQL Server 2016 this has been made simpler by using a new function STRING_SPLIT(), let’s see this with a simple example:

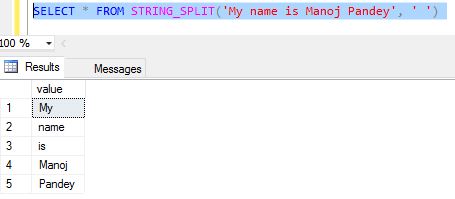

SELECT * FROM STRING_SPLIT('My name is Manoj Pandey', ' ')

This will split all the words in the sentence separated by a whitespace in different rows:

Here is the syntax for the same:

STRING_SPLIT ( string , separator )

Please note: that the separator should be a single character expression, so this should not be an empty string, like:

SELECT * FROM STRING_SPLIT('My name is Manoj Pandey', '')

Will result into an error:

Msg 214, Level 16, State 11, Line 3

Procedure expects parameter ‘separator’ of type ‘nchar(1)/nvarchar(1)’.

–> Let’s check one more example:

We have a comma separated Cities list for each State as a row in a table:

CREATE TABLE #tempCityState ( [State] VARCHAR(5), [Cities] VARCHAR(50) ) INSERT INTO #tempCityState SELECT 'AK', 'Nashville,Wynne' UNION ALL SELECT 'CA', 'Fremont,Hanford,Los Anggeles' UNION ALL SELECT 'CO', 'Aspen,Denver,Teluride,Vail'

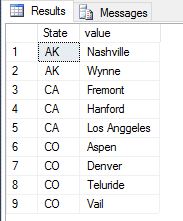

Now, lets just use the simple function STRING_SPLIT() with CROSS APPLY operator, like:

SELECT [State], value FROM #tempCityState CROSS APPLY STRING_SPLIT([Cities], ',')

Will give you following output:

–> And if I compare the performance of this function with the earlier approach I mentioned in my [previous post]:

Run both the queries by enabling Actual Execution plan (Ctrl + M):

SELECT [State], value

FROM #tempCityState

CROSS APPLY STRING_SPLIT([Cities], ',')

SELECT A.[State], Split.a.value('.', 'VARCHAR(100)') AS City

FROM (SELECT [State], CAST ('<M>' + REPLACE([Cities], ',', '</M><M>') + '</M>' AS XML) AS String

FROM #tempCityState) AS A

CROSS APPLY String.nodes ('/M') AS Split(a)

ORDER BY 1,2

I can see that the STRING_SPLIT() gives me better performance compared to the other:

SQL Trivia – Difference between COUNT(*) and COUNT(1)

Yesterday I was having a discussion with one of the Analyst regarding an item we were going to ship in the release. And we tried to check and validate the data if it was getting populated correctly or not. To just get the count-diff of records in pre & post release I used this Query:

SELECT COUNT(*) FROM tblXYZ

To my surprise he mentioned to use COUNT(1) instead of COUNT(*), and the reason he cited was that it runs faster as it uses one column and COUNT(*) uses all columns. It was like a weird feeling, what to say… and I just mentioned “It’s not, and both are same”. He was adamant and disagreed with me. So I just kept quite and keep on using COUNT(*) 🙂

But are they really same or different? Functionally? Performance wise? or by any other means?

Let’s check both of them.

The MSDN BoL lists the syntax as COUNT ( { [ [ ALL | DISTINCT ] expression ] | * } )

So, if you specify any numeric value it come under the expression option above.

Let’s try to pass the value as 1/0, if SQL engine uses this value it would definitely throw a “divide by zero” error:

SELECT COUNT(1/0) FROM [Person].[Person]

… but it does not. Because it just ignores the value while taking counts. So, both * and 1 or any other number is same.

–> Ok, let’s check the Query plans:

and there was no difference between the Query plans created by them, both have same query cost of 50%.

–> These are very simple and small queries so the above plan might be trivial and thus may have come out same or similar.

So, let’s check more, like the PROFILE stats:

SET STATISTICS PROFILE ON SET STATISTICS IO ON SELECT COUNT(*) FROM [Sales].[SalesOrderDetail] SELECT COUNT(1) FROM [Sales].[SalesOrderDetail] SET STATISTICS PROFILE OFF SET STATISTICS IO OFF

If you check the results below, the PROFILE data of both the queries shows COUNT(*), so the SQL engine converts COUNT(1) to COUNT(*) internally.

SELECT COUNT(*) FROM [Sales].[SalesOrderDetail]

|--Compute Scalar(DEFINE:([Expr1002]=CONVERT_IMPLICIT(int,[Expr1003],0)))

|--Stream Aggregate(DEFINE:([Expr1003]=Count(*)))

|--Index Scan(OBJECT:([AdventureWorks2014].[Sales].[SalesOrderDetail].[IX_SalesOrderDetail_ProductID]))

SELECT COUNT(1) FROM [Sales].[SalesOrderDetail]

|--Compute Scalar(DEFINE:([Expr1002]=CONVERT_IMPLICIT(int,[Expr1003],0)))

|--Stream Aggregate(DEFINE:([Expr1003]=Count(*)))

|--Index Scan(OBJECT:([AdventureWorks2014].[Sales].[SalesOrderDetail].[IX_SalesOrderDetail_ProductID]))

–> On checking the I/O stats there is no difference between them:

Table 'SalesOrderDetail'. Scan count 1, logical reads 276, physical reads 1, read-ahead reads 288, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. Table 'SalesOrderDetail'. Scan count 1, logical reads 276, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Both the queries does reads of 276 pages, no matter they did logical/physical/read-ahead reads here. Check difference b/w logical/physical/read-ahead reads.

So, we can clearly and without any doubt say that both COUNT(*) & COUNT(1) are same and equivalent.

There are few other things in SQL Server that are functionally equivalent, like DECIMAL & NUMERIC datatypes, check here: Difference b/w DECIMAL & NUMERIC datatypes.

SQL Error – The database owner SID recorded in the master database differs from the database owner SID

Today we got an email from our support team that they are not able to execute Stored Procedures on a particular Database and getting following error:

The server principal “domain\user” is not able to access the database “dbXYZ” under the current security context.

The database owner SID recorded in the master database differs from the database owner SID recorded in database ‘dbXYZ’. You should correct this situation by resetting the owner of database ‘dbXYZ’ using the ALTER AUTHORIZATION statement.

The Database was restored by a backup from Production box to a separate Dev box. I checked the Properties of the Database and the Owner property shows the Prod service account, which cannot be created on Dev machine.

So, we just need to change the Owner Name of the database by using “ALTER AUTHORIZATION” statement of sp_changedbowner system SP, as shown below:

USE [DatabaseName] GO -- Option #1 EXEC sp_changedbowner 'sa' GO -- OR-- -- Option #2 ALTER AUTHORIZATION ON DATABASE::[DatabaseName] TO [sa] GO

And the issue got resolved !!!

SQL DBA – Database restored successfully, but stuck in (Restoring…) state

Today after restoring a database on my DEV machine from a PROD backup, I observed that the database was still showing as Restoring… in Object Explorer, and cannot be used. I again checked in SSMS results pane and it was showing as a successful restore with below message:

100 percent processed.

Processed 21713736 pages for database ‘dbName’, file ‘dbName_Data’ on file 1.

Processed 398 pages for database ‘dbName’, file ‘dbName_log’ on file 1.

RESTORE DATABASE successfully processed 21714134 pages in 506.682 seconds (334.808 MB/sec).

I checked online and found that I was using WITH NO RECOVERY option with my RESTORE DATABASE statement, like:

RESTORE DATABASE db_name WITH NO RECOVERY

… and by using above option, you make the Database to be unused, and allow more Roll Forward actions to continue with the next RESTORE DATABASE statements in sequence.

As this was not my intention, so I can simply mark by Database to stop accepting more Transaction Logs, by forcing the database out of Restoring state, by issuing following statement:

RESTORE DATABASE dbName WITH RECOVERY

Converting database ‘dbName’ from version 706 to the current version 852.

Database ‘dbName’ running the upgrade step from version 706 to version 770.

…

Database ‘dbName’ running the upgrade step from version 851 to version 852.

RESTORE DATABASE successfully processed 0 pages in 1.716 seconds (0.000 MB/sec).

… and my database was out from Restoring… state, and I could use it now !!!

Store JSON data in a table, OPENJSON and JSON_Value functions | SQL Server 2016 – Part 4

In my previous posts I talked about how to [export] a Table or Query data into JSON string format, and [read it back] from JSON string to Relational-table format, and with [nested elements].

Here, in this post I’ll show how we can store JSON data in a normal table column, just like you store XML data.

XML data is stored in a column of XML datatype which also check the validity of the XML data to be stored. But to store JSON data there is no new datatype introduced, JSON can be stored in an NVARCHAR datatype column just like a plain text, and to validate it you can add a CHECK constraint on it.

IsJSON() function: can be used as a CHECK constraint on the columns that contain JSON string which will validate if the JSON string is in proper format or not.

As we will need AdvantureWorks2014 Sample Database in our example below, we need to upgrade its Compatibility from SQL 2014 to SQL 2016, i.e. from level 120 to 130, like:

USE [master] GO ALTER DATABASE [AdventureWorks2014] SET COMPATIBILITY_LEVEL = 130 GO

You can download AdvantureWorks2014 sample Database from Microsoft [CodePlex site].

–> Ok, now let’s create a new Table with OrderDetailsJSON column for storing JSON string with a CHECK constraint on it:

USE [AdventureWorks2014] GO CREATE TABLE CustomerOrder ( BusinessEntityID INT, FirstName NVARCHAR(50), MiddleName NVARCHAR(50), LastName NVARCHAR(50), EmailPromotion INT, OrderDetailsJSON NVARCHAR(MAX) -- normal column with NVARCHAR datatype CHECK ( IsJSON ( OrderDetailsJSON ) = 1 ) -- CHECK Constraint to validate JSON string )

–> Let’s create a sample record-set with JSON data in OrderDetailsJSON column. We will use FOR JSON AUTO option to convert relational data to JSON string for our example, as shown below:

;WITH CTE_PersonContact AS ( SELECT BusinessEntityID, FirstName, MiddleName, LastName, EmailPromotion, OrderDetailsJSON = ( SELECT SalesOrderID, OrderDate, SubTotal, TaxAmt, TotalDue FROM [AdventureWorks2014].[Sales].[SalesOrderHeader] S WHERE S.CustomerID = P.BusinessEntityID FOR JSON AUTO -- here ) -- our JSON column FROM [Person].[Person] P ) INSERT INTO CustomerOrder SELECT BusinessEntityID, FirstName, MiddleName, LastName, EmailPromotion, OrderDetailsJSON FROM CTE_PersonContact WHERE OrderDetailsJSON IS NOT NULL -- (9778 row(s) affected)

–> Check the above inserted records with the OrderDetailsJSON column containing data in JSON format:

SELECT * FROM CustomerOrder -- (9778 row(s) affected)

–> Let’s Query back the JSON data from the OrderDetailsJSON column with other columns in relational form, by using OPENJSON() function. As for each Customer it can contain multiple orders we will get multiple rows for each Customer and multiple columns as per defined in the JSON string:

SELECT C.BusinessEntityID, C.FirstName, C.MiddleName, C.LastName, C.EmailPromotion, J.SalesOrderID, J.OrderDate, J.SubTotal, J.TaxAmt, J.TotalDue FROM CustomerOrder C CROSS APPLY OPENJSON (OrderDetailsJSON) WITH ( SalesOrderID INT, OrderDate DATETIME, SubTotal MONEY, TaxAmt MONEY, TotalDue MONEY ) AS J -- (17463 row(s) affected)

–> And if you want to get just one Order per Customer then you can use following Query, by using JSON_Value() function and by specifying the array key pointer/position to get the first value fro the array:

SELECT C.BusinessEntityID, C.FirstName, C.MiddleName, C.LastName, C.EmailPromotion, SalesOrderID = JSON_Value (OrderDetailsJSON, '$[0].SalesOrderID'), OrderDate = JSON_Value (OrderDetailsJSON, '$[0].OrderDate'), SubTotal = JSON_Value (OrderDetailsJSON, '$[0].SubTotal'), TaxAmt = JSON_Value (OrderDetailsJSON, '$[0].TaxAmt'), TotalDue = JSON_Value (OrderDetailsJSON, '$[0].TotalDue') FROM CustomerOrder C -- (9778 row(s) affected)

We can also use JSON string for passing multiple values from a single parameter in an Stored Procedure to implement dynamic parameters functionality as a workaround, check the [blog post] for the same.